I’ve moved to my own site, http://sphavens.com – please change your bookmarks and RSS feeds. Thanks!

How much should I unit test?

September 29, 2009 - Scott Havens

A few months ago I was debating with a friend over IM about how far one should take unit testing. He was of the opinion that one should only bother with unit tests on complex methods, and that unit testing the simple stuff was a waste of time. I pressed him on just how simple an app or a method had to be before it was too simple to bother unit testing, and he responded with the simplest example he could think of: “After all, you don’t really need to test ‘Hello, Worl!’” [sic].

That was the end of that argument.

Virtualization in a mobile environment

September 22, 2009 - Scott Havens

Last week a colleague was taking the opportunity to revisit his development environment. In light of the availability of Windows Server 2008 R2, Win7, and Beta 1 of Visual Studio 2010, Eric was interested in pursuing a heavily virtualized setup. As he knew I am a proponent of doing all development (including the IDEs) in virtuals and that I had converted to a Hyper-V-based development environment, we started discussing what approach he might take. Eric travels a lot, so he’s opted to work entirely on mobile devices. His primary notebook is a beast: Core 2 Quad, 8GB RAM, 17″ 1920×1200 display, 1GB nVidia Quadro FX 3700m, all in a svelte 11 pound package (including power supply). You’d think it’d be great for the developer who wants to virtualize, or the conference presenter who wants to demonstrate the latest and greatest to a crowd.

Unfortunately, Microsoft’s professional-level offerings for virtualization on notebooks are nonexistent.

At first, Eric wanted to go the Hyper-V R2 route. He installed Server 2K8 R2, installed VS2010 in the host partition, and TFS2010/SQL2K8/MOSS2007 in virtuals. He had heard me complain about the graphics performance problems with Hyper-V in the past, but wanted to see for himself. Sure enough, Visual Studio ran quite slowly. However, as it was his first time using the beta, he didn’t know if the lack of speed was just because it was a beta, or if Hyper-V was the cause. Temporarily disabling the Hyper-V role caused a severalfold speedup in the application, going from painful to pleasant. Permanently fixing this would require running XP-level (or, ugh, VGA) drivers for his top-end video card. On top of this, Hyper-V completely disables all forms of sleep in Windows. This was not an acceptable solution to a mobile user.

Frustrated, he decided to resort to Virtual PC. It’s free and easy to use, but that idea was shot down when he realized that not only does Virtual PC not support multiprocessor guests (annoying, but something he could cope with), but it won’t run 64-bit guests either. Given that many of the latest Microsoft server releases (including Windows Server 2008 R2 itself) are 64-bit only, this was a dealbreaker.

What’s left? I suggested VMware Workstation 6.5. It supports multicore guests, 64-bit guests, and letting the host computer sleep, all without painful graphics performance. It’s not free, but if you’re looking to get the job done, it’s the best solution. If you want free, VirtualBox is a good option, although not quite as polished as VMware Workstation. If you want Microsoft, you’re out of luck.

Eric went with VMware Workstation.

Finally, I should note that Intel is releasing the next round of mobile CPUs in Q1 2010. As they’ll be Nehalem chips, many of them should have improved hardware virtualization support that matches what we can get on the desktop today. While that won’t fix the Hyper-V sleep mode problem, it should at least alleviate the Hyper-V graphics performance problem.

Effects-based programming considered harmful (Or: How I learned to stop worrying and love functional programming), part III

September 15, 2009 - Scott Havens

Part I and Part II are also available.

As I mentioned in part I, flagging a method as static in C# isn’t sufficient to eliminate all side effects. It eliminates a single (albeit common) class of side effects, mutation of instance variables, but other classes of side effects (mutation of static variables, any sort of IO like database access or graphics updates) are still completely possible. Effects are not a first-class concern in C#, and so the language as it stands today has no way to guarantee purity. Certainly, one can code in a functional style in C#, and flagging methods as static gets you many of the benefits by indicating intent and minimizing your potential effect “surface area”. But that’s still not the same as having a compiler guarantee that every method you think is pure actually is. It’s easy for someone who doesn’t understand that a particular method was intended to be a pure function to change your static method to make a database call, and the compiler will be happy to comply.
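To make the point concrete, here is a minimal sketch. It is written in Python rather than C# so that it runs as-is; `@staticmethod` stands in for C#’s `static`, and the names (`PriceCalculator`, `call_count`, and both methods) are invented for illustration.

```python
class PriceCalculator:
    # Class-level (static) state shared by every caller.
    call_count = 0

    @staticmethod
    def total_price(unit_price, quantity):
        """Pure: the result depends only on the arguments."""
        return unit_price * quantity

    @staticmethod
    def total_price_with_effect(unit_price, quantity):
        """Still 'static', but not pure: it mutates shared static state."""
        PriceCalculator.call_count += 1
        return unit_price * quantity


# Both methods return the same value...
assert PriceCalculator.total_price(5, 3) == 15
assert PriceCalculator.total_price_with_effect(5, 3) == 15
# ...but only one of them left a trace behind.
assert PriceCalculator.call_count == 1
```

Nothing in the signature of the second method distinguishes it from the first, which is exactly the problem: the static flag narrows what a method can touch, but it cannot promise purity.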

Should C# be changed to raise effects to first-class concerns? I don’t think most developers are used to differentiating between effects-laden code and code without effects, and may balk at any changes to the language that force them to. Further, I fear backwards-compatibility concerns may limit what one could change about C#; instead of flagging a method as having effects (think the IO flag seen in some functional languages), it may be easier to invert the idea and flag methods as strictly pure. Methods with this flag would be unable to access variables not passed in as input parameters and restricted in what kinds of calls they could make.

I don’t know yet what the right approach is, and I don’t think anyone else does either. I do know people like Joe Duffy and Anders Hejlsberg are contemplating it on a daily basis. Something will need to be done; as developers deal more and more with massively parallel hardware, segregating or eliminating effects will go from being a “nice to have” aspect of one’s programming style to an explicitly controlled “must have” just to get software that works.

Effects-based programming considered harmful (Or: How I learned to stop worrying and love functional programming), part II

September 15, 2009 - Scott Havens

Part I of this series can be found here.

My code didn’t improve just because I was giving up on OOP principles. I was simultaneously adopting functional principles in their place, whether I realized it at first or not. I found several benefits in doing so:

Testability

Consider a unit test for a pure function. You determine the appropriate range of input values, you pass the values in to the function, you check that the return values match what you expect. Nothing could be simpler.
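As a small illustration of how little such a test needs, here is a sketch in Python (not the C# this series is about). The function `apply_discount` and its integer-cents convention are hypothetical, chosen only to keep the arithmetic exact.

```python
def apply_discount(price_cents, percent):
    """Pure function: integer cents in, integer cents out, nothing else touched."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


def test_apply_discount():
    # A typical value plus both boundaries of the input range.
    assert apply_discount(1000, 0) == 1000
    assert apply_discount(1000, 25) == 750
    assert apply_discount(1000, 100) == 0
    # Out-of-range input is rejected.
    try:
        apply_discount(1000, 101)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")


test_apply_discount()
```

There is no setup, no teardown, and nothing to inspect afterwards except the return value.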

Now consider testing a method that does the same job, but as an instance method. You’re not just considering the input and output values of a function with a simple unit test anymore. You either have to go through every step of the method and make sure you’re not touching any variables you’re not supposed to, or change the unit test to check every variable that is in scope (i.e. at least every field on that object), if you’re being comprehensive. And if you take a shortcut and don’t test every in-scope variable, anyone can add a line to your method that updates another variable, and your tests will never complain. Methods that perform external IO, such as reading values from a database to get their relevant state, are even worse: you have to insert and compare the appropriate values in the database out of band of the actual test, not to mention making sure the database is available in the same environment as the unit tests in the first place.
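The following Python sketch shows the shortcut and the comprehensive alternative side by side. The `Order` class, its `audited` field, and the `changed_fields` helper are all made up for this example.

```python
class Order:
    def __init__(self, unit_price, quantity):
        self.unit_price = unit_price
        self.quantity = quantity
        self.total = None
        self.audited = False

    def calculate_total(self):
        """Instance method: reads state from self and writes the result back."""
        self.total = self.unit_price * self.quantity
        # A line someone added later. Nothing in the signature reveals it.
        self.audited = True


def test_calculate_total_shortcut():
    order = Order(unit_price=5, quantity=3)
    order.calculate_total()
    # Passes, and would keep passing whatever else the method quietly changes.
    assert order.total == 15


def changed_fields(obj, action):
    """Run action(obj) and return the names of the fields it modified."""
    before = dict(vars(obj))
    action(obj)
    return {
        name
        for name, value in vars(obj).items()
        if name not in before or before[name] != value
    }


test_calculate_total_shortcut()
# The comprehensive check has to look at every field on the object.
assert changed_fields(Order(5, 3), Order.calculate_total) == {"total", "audited"}
```

The comprehensive version works, but it is generic machinery that every stateful test would need, and it still only covers fields on this one object.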

When software is designed in such a way that side effects are minimized, test suites are easy to write and maintain. When side effects occur everywhere in code, automated testing is impractical at best and impossible at worst.

Limited coupling of systems

When I take the idea of eliminating side effects to its logical conclusion, I end up with a lot of data objects that don’t do anything except hold state, a lot of pure functions that produce output values from input parameters, and a mere handful of methods that set state on the data objects based on the aggregate results from the pure functions. This, not coincidentally, works extremely well with loosely coupled and distributed systems architectures. With modern web services communicating between systems that were often developed independently, it’s usually not even possible to send instance methods along with the data. It just doesn’t make sense to transfer anything except state. So why bundle methods and state together internally if you will have to split them up again when dealing with the outside world? Simplify it and keep it decoupled everywhere.
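A rough Python sketch of that three-way split follows. The `Order` data object, the function names, and the use of JSON as the wire format are assumptions made for illustration, not details from the code I was refactoring.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Order:
    """Data object: holds state and nothing else."""
    unit_price_cents: int
    quantity: int
    total_cents: int = 0


def calculate_total(unit_price_cents, quantity):
    """Pure function: output derived only from input parameters."""
    return unit_price_cents * quantity


def price_order(order):
    """One of the few places where state is actually set."""
    order.total_cents = calculate_total(order.unit_price_cents, order.quantity)
    return order


order = price_order(Order(unit_price_cents=250, quantity=4))

# Crossing a service boundary: only state travels, never methods.
wire_format = json.dumps(asdict(order))
received = Order(**json.loads(wire_format))
assert received == order
```

Because `Order` carries no behavior, serializing it loses nothing, and the receiving side is free to apply its own functions to the same state.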

Decoupling internally means that the line between ‘internal’ code and ‘external’ code is malleable, as well. This leads me to another benefit of eliminating side effects:

Scalability (scaling out)

If all you’re doing is passing state from one internal pure function to another internal pure function, what’s stopping you from moving one of those functions to another computer altogether? Maybe your software has a huge bottleneck in the processing that occurs in just one function that gets repeatedly applied to different inputs. In this case, it may be reasonable to farm that function out to a dedicated server, or a whole load balanced/distributed server farm, by simply dropping in a service boundary.

Concurrency (scaling up)

One of the keys to maximizing how well your software scales up on multi-CPU systems is minimizing shared state. When methods have side effects, the developer must perform rigorous manual analysis to ensure that all the state touched by those methods is threadsafe. Further, just because it’s threadsafe doesn’t mean it will perform well; as the number of threads increases, lock contention for that state can increase dramatically.

When a method is totally pure, on the other hand, you have a guarantee that that method, at least, will scale linearly with your hardware and the number of threads. In other words, as long as your hardware is up to snuff, running 8 threads of your method should be 8 times faster; you simply don’t have to worry about it. On top of that, pure methods are inherently threadsafe; no side effects means no shared state in the method, so threads won’t interfere with each other. While you probably won’t be able to avoid shared state completely, keeping as many functions pure as possible means that the few places that you do maintain shared state will be well-known, well-understood, and much easier to analyze for thread safety and performance.
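Here is a minimal Python sketch of the thread-safety half of that claim; the function `score` and the choice of 8 workers are arbitrary. One caveat is specific to this sketch: CPython’s global interpreter lock keeps threads from speeding up CPU-bound work, so the example demonstrates correctness without locks rather than linear scaling.

```python
from concurrent.futures import ThreadPoolExecutor


def score(value):
    """Pure: no globals, no I/O, no mutation of its argument."""
    return sum(digit * digit for digit in map(int, str(value)))


inputs = list(range(1000))

# Sequential baseline.
expected = [score(value) for value in inputs]

# Same function on 8 worker threads. No locks are needed because
# score() shares no state between calls.
with ThreadPoolExecutor(max_workers=8) as pool:
    parallel = list(pool.map(score, inputs))

assert parallel == expected
```

Since `score` takes and returns plain values, the same call could be handed to `ProcessPoolExecutor` (which offers the same `map` interface) or moved behind a service boundary without changing the function itself.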

For all these reasons, I found adopting a functional style to be a huge win. However, not all is wine and roses for C# developers who have learned to love functional programming…

(to be continued in part III)

Effects-based programming considered harmful (Or: How I learned to stop worrying and love functional programming), part I

September 4, 2009 - Scott Havens

A while back I was refactoring some C# code, and I noticed that, in the process, I was blindly converting as many instance methods to static as I feasibly could. My process was this:

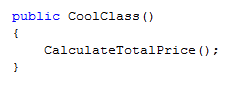

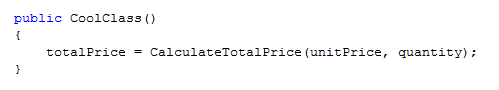

- Stumble across instance method. This method would run an algorithm using values stored in the object before setting a different value on the same object; it usually returned void or some sort of success flag. For example:

- Convert to static method that accepts the necessary ‘before’ state as parameters and returns the results.

- Alter the calling method to both pass in the ‘before’ state and set the ‘after’ state of the object according to the value returned from the newly-staticized method.

From

to

- Feel sense of satisfaction that I’ve “fixed” the software somehow.

- Rinse and repeat, sometimes moving the actual setting of particular object state up the call tree several times by the time I’m done.
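The original code samples for these steps are not reproduced in this copy of the post. What follows is a hypothetical stand-in, written in Python rather than C#, that borrows the names `CoolClass` and `CalculateTotalPrice` mentioned later in the post; the fields and the arithmetic are invented.

```python
class CoolClassBefore:
    def __init__(self, unit_price, quantity):
        self.unit_price = unit_price
        self.quantity = quantity
        self.total_price = None
        self.calculate_total_price()

    def calculate_total_price(self):
        # Reads state from the object, writes state to the object, returns nothing.
        self.total_price = self.unit_price * self.quantity


class CoolClassAfter:
    def __init__(self, unit_price, quantity):
        self.unit_price = unit_price
        self.quantity = quantity
        # The caller passes the 'before' state in and sets the 'after' state itself.
        self.total_price = CoolClassAfter.calculate_total_price(unit_price, quantity)

    @staticmethod
    def calculate_total_price(unit_price, quantity):
        # No access to self: the result depends only on the parameters.
        return unit_price * quantity


assert CoolClassBefore(5, 3).total_price == CoolClassAfter(5, 3).total_price == 15
```

Both classes end up in the same state; the difference is that in the second one the only line that writes `total_price` sits in the constructor, in plain view.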

But is my sense of satisfaction justified? Certainly in an example as simplistic as the one I pasted above one could argue that I actually made the program harder to read. I had just been doing it because it felt right, not because I had logical reasons for it. And that’s no way to write code.

Surely, I needed to sit back and think about what I was doing.

My first realization was that I wasn’t preferring static methods, per se; I was preferring methods that had no side effects. I was merely converting instance methods to statics in order to guarantee that no instance variables were being set, a common side effect in day-to-day imperative programming. External IOs (e.g. accessing a database) and setting static variables (e.g. in rarely-used singletons) are still possible side effects in static methods. I considered both of those with the same distaste, or at least wariness. However, I had already ghettoized external IO and minimized singletons as much as possible, each for their own reasons; as those had already been addressed before I went on my latest refactoring rampage, only the instance methods remained to draw my ire.

Eliminating or minimizing side effects via converting instance to static methods had a corollary: I had to separate object state from the methods operating on that state. No longer would I have objects that simply knew what to do with themselves. Before, I would have a void method call that did calculations and set state on its object (e.g. the original CalculateTotalPrice() method); now I had some functions that only performed calculations on input values (the new CalculateTotalPrice), and other methods that only set/retained state (the new CoolClass() constructor). I didn’t even need the two kinds of methods to exist on the same object. I was giving up on one of the most basic principles of object-oriented programming.

And you know what? It made the code better.

(to be continued in part II…)

Why is my Windows Home Server backup so slow?

September 2, 2009 - Scott Havens

The other night I was on the computer later than usual, late enough that my WHS backup started. As what I was doing was low-impact, I decided to let the backup run instead of postponing it. After a couple hours, I noticed that not only was the backup not done, but the progress bar was crawling along far more slowly than I remembered it being originally. It was time to investigate.

As a first step, I remoted into the server and pulled up taskmgr. On the Processes tab, no process (even demigrator.exe and whsbackup.exe, processes that are usually heavily worked during normal WHS activities) appeared to be using more than a few percent of the CPU at a time, but the aggregate CPU Usage listed at the bottom appeared pegged at 50%. As the server is dual-core, that meant an entire core was being sucked up by something not listed under Processes.

Experience suggested this was almost certainly due to hardware interrupts, but Task Manager doesn’t show CPU usage due to hardware interrupts explicitly. Process Explorer, however, does, and downloading and running ProcExp confirmed the abnormal hardware interrupts.

At this point I could have tracked down the precise source of the interrupts with a tool like kernrate. (Adi Oltean provides a good guide on how to use it.) However, I decided to use a little intuitive problem solving first. Hardware interrupt issues tend to be caused by two things:

- Broken drivers

- Hardware failures

While the drivers haven’t been updated in a while on that server, and may have bugs that have been fixed in newer versions, my usage patterns with the server have been pretty static. It was unlikely that I had only recently uncovered a bug in the driver if I hadn’t seen it up to now. Further, with the number of hard drives in the box, I wouldn’t have been surprised if one of them was failing.

I checked the System event log, but found no recent errors that would suggest a failing disk (e.g. atapi errors). I did find errors from a few weeks ago that correlated to when I thought one of my drives was failing; I had been able to correct that by reseating the loose SATA cable and no errors appeared after that point.

Might my problem still be related to that, though? Windows Home Server, because it’s based on Windows Server 2003, generally doesn’t allow one to run in AHCI mode for the disks; I had my BIOS configured to run the disk controllers in IDE mode. The Windows IDE driver (atapi.sys) automatically adjusts the transfer mode for a channel when it runs into errors talking to the disk on that channel, using slower and slower DMA modes until it gives up and switches to PIO mode. PIO mode requires a lot of hardware interrupts to transfer data; that’s why DMA mode was introduced in the first place.

At that point, the problem was obvious. When the ATAPI driver detected the drive errors a few weeks ago, it put the channel into PIO mode in an attempt to eliminate the errors. I fixed the errors by reseating the cable, but never reset the IDE channel to which the drive was connected, so it was still in PIO mode, and thus generating a plethora of hardware interrupts. I confirmed this hypothesis in Device Manager; the Secondary IDE Channel’s Properties->Advanced Settings dialog showed the Current Transfer Mode as ‘PIO Mode’.

To fix it, I simply uninstalled the Secondary IDE Channel and rebooted the server to let it redetect the channel. After reboot and redetection, the Current Transfer Mode was restored to ‘Ultra DMA Mode 6’. Sure enough, hardware interrupts dropped to next-to-nothing, and backup speeds were restored to their former glory.

How to mount ISO files from a share in Hyper-V

September 1, 2009 - Scott Havens

I’m a huge fan of Windows Home Server. I love the easy-to-set-up image- and file-based backup system, and I love the ability to just slap in another 2TB drive if I start getting low on space. In addition to music, movies, photos, and personal documents, I keep all the software install packages and ISOs I’ve downloaded on my server. Further, with the user account synchronization built into WHS, I’ve been able to simplify my home network and no longer maintain any (permanent) Active Directory domains.

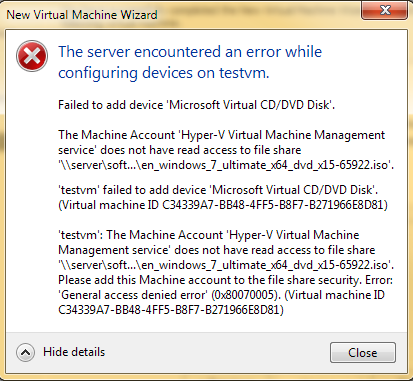

This setup has worked pretty well up to now. In VMware Workstation, when I want to build a new VM, I just mount the appropriate OS .ISO from \\server\Software\ and boot to the DVD. Hyper-V, though, doesn’t like to mount ISOs over the network. On a fresh Hyper-V R2 install, when I tried to mount a Win7 ISO for a new guest, I was greeted with the following error:

In an Active Directory environment, there is a documented solution for this; when one is also managing the Hyper-V host remotely, additional configuration is required that involves constrained delegation.

In an environment without Active Directory (like my home network), or when the machines in question are in domains that don’t talk with each other, we need something else. One option is to enable anonymous access to the share where the ISOs are stored. This solution is fine for my home network, and may be feasible for other small networks where security isn’t as much of an issue. While the instructions below are for Windows Home Server specifically, they are easily adapted to a bog-standard (non-WHS) file server.

1. First, go to Administrative Tools->Local Security Policy.

   In Security Settings/Local Policies/Security Options, make the following changes:

   - Network Access: Do not allow anonymous enumeration of SAM accounts and shares: Disabled
   - Network Access: Let Everyone permissions apply to anonymous users: Enabled
   - Network Access: Restrict anonymous access to Named Pipes and Shares: Disabled
   - Network Access: Shares that can be accessed anonymously: Add SOFTWARE (or the appropriate share) to the existing list

   In Security Settings/Local Policies/User Rights Assignment:

   - Access this computer from the network: Add ANONYMOUS LOGON and Everyone if they’re not already there

2. After closing the Local Security Settings window you’ll need to reboot the server or force application of security policy via gpupdate.

3. Then, open up Computer Management and go to System Tools->Local Users and Groups->Groups. Windows Home Server creates several security groups that provide read-only and read/write access to the shares it manages. Find which group offers Read-Only access to the share and add Everyone to this group. On my computer, the Software share is managed by RO_8 and RW_8, so I added Everyone to the RO_8 group.

4. While you’re in Computer Management, go to System Tools->Shared Folders->Shares. In the properties for the appropriate share, add Everyone to the Share Permissions.

After following these steps, I was able to mount ISOs from the share successfully in the Hyper-V Manager.

Unfortunately, this solution has a caveat beyond just the security implications. Windows Home Server likes you to manage everything through its interface. If you’ve made changes out of band, WHS is happy to ‘fix’ them for you. After every reboot, WHS removes the Everyone token from both the security group and from the share permissions. This means that every time I reboot, I have to perform steps 3 and 4 again. This is frustrating enough that I’ve considered writing a script for this, but I reboot the server so rarely that I haven’t bothered.
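For what it’s worth, such a script could start out like the Python sketch below. It assumes a Python interpreter is available on the server, it covers only the group membership from step 3 (using the standard `net localgroup ... /add` command), and the function names are made up; the share permissions from step 4 would still need to be restored separately.

```python
import subprocess


def build_regrant_command(group, member="Everyone"):
    """Build the command that re-adds a member to a local group (step 3)."""
    return ["net", "localgroup", group, member, "/add"]


def regrant(group, dry_run=True):
    """Re-add Everyone to the given group, or just print the command."""
    command = build_regrant_command(group)
    if dry_run:
        # Print instead of executing, so the script is safe to try anywhere.
        print(" ".join(command))
        return command
    subprocess.check_call(command)
    return command


# RO_8 is the read-only group for the Software share on my server;
# the group name on another server will differ.
regrant("RO_8")
```

Running it with `dry_run=False` from an administrative session after each reboot would take care of the group membership.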

Can I mount IMG files in Hyper-V?

August 31, 2009 - Scott Havens

The vast majority of the software I use on a regular basis is packaged as standalone executables, MSIs, or ISO images. However, the Team Foundation Server client for Visual Studio 2005 (yes, I still have to use 2K5 regularly) is in an IMG file. With VMware Workstation, this wasn’t a problem; IMG images could be mounted just as easily as ISO images. Hyper-V (and R2), unfortunately, is limited to mounting ISOs. So how do I get the VSTF client installed on my Hyper-V guest?

Given the rarity of IMG files nowadays, I decided to settle for a one-off workaround, and convert my IMG to an ISO that would be broadly compatible with any image mounting software, including Hyper-V. Several options are available for this; I used MagicISO to do the conversion. The entire process, from download to new ISO, took only a few minutes, and I was able to successfully mount what had originally been an IMG image.

Which virtualization platform is right for me?

August 31, 2009 - Scott Havens

As a developer working primarily with Microsoft technologies, I would love for Microsoft to provide a proper desktop virtualization solution, i.e. a virtualization platform that can be used directly on a desktop computer. I started with various versions of Virtual PC and Virtual Server, but they have all had significant shortcomings. Even the latest Virtual PC is still essentially useless for developers, as it still doesn’t support 64-bit guests, much less several other desirable features such as multiple CPUs per guest. Thus, I’ve been using VMware Workstation for my primary virtualization solution for a while now. It supports 64-bit and multiple CPUs per guest, and sports a reasonably slick interface. I don’t really have any complaints about it other than the two-CPU-max-per-guest limitation.

However, a lot of my recent work has involved writing code that scales well to 4 or 8 threads. That’s hard to test properly in an environment that supports only 2 threads. Plus, I work around mostly Microsofties, so I would like some reason to get off a VMware platform, or at least be able to explain why the Microsoft solutions were all inferior. So, now that Windows 7 and Server 2008 R2 are RTM, it’s time to try using Hyper-V for my desktop virtualization platform. I’ve already run into (and solved) several problems; I’ll detail those and any future problems that pop up here.